Technologies

Many technologies are available for implementing Big Data and AI. The field is highly innovative and dominated by the open-source ecosystem built around Apache Hadoop. It spans NoSQL databases (MongoDB, HBase, CouchDB or Redis, for example), application architectures (Data Lake), cloud infrastructures, integration tools (Talend, NiFi...), tools and languages for data science and AI (Python, Scala, KNIME, Dataiku...), data virtualization, in-memory...

Technologies are multiplying and choices can be bewildering. Discover the fundamentals of available technologies, and take advantage of our tutorials dedicated to Data tools and languages.

Data technological fundamentals

Data Governance

Exploring the Benefits of Data Catalogs for International Organizations

If you missed our recent webinar, “Exploring the Benefits of Data Catalogs for International Organizations”, you’re in luck! The replay is now available, offering you a second chance to learn how a data catalog can significantly improve how your organization manages and uses its data, leading to better outcomes and more efficient operations. What you will…

-

Data Trends

Implementing a Cloud Data Platform: The Do’s and Don’ts

The Cloud offers companies great opportunities in terms of performance and scalability. However, it is highly advisable to start from a few golden rules that mitigate risks and ensure your company is ready for a successful cloud strategy implementation. We already stated how powerful Cloud Data Platforms are for companies, enabling new…

-

CRM

Marketing Strategy: why and how to implement a Customer Data Platform

In my previous article, I explained that, this year again, we would see a surge in business digitalisation, and that this would accelerate some already observed trends: namely, hyper-automation, AI directly embedded in business processes, Responsive Customer Experience… And I concluded with enterprises’ increasingly high expectations: i.e. support beyond new CRM platform implementation and third-party maintenance. In this…

-

Data technological fundamentals

How to build the next generation Data Lake – Business Session

Does your Data Architecture support your current and future analytics needs? Are you going back and forth, wondering whether the best solution is a data warehouse or a data lake? What are the advantages and disadvantages of each option? Modern Cloud Data Platforms are making this choice easier, by bringing the best of both worlds.…

-

Data technological fundamentals

Mapping and open data: understanding the bases for your business

Open data and data science today enable us to analyse geographical data by measuring interactions that could otherwise only be modelled with great difficulty. Demonstration.

-

Data technological fundamentals

From the data lake to the agile data warehouse: decision-making in the big data era

How can the data lake be combined with a data warehouse that already serves as the real keystone of the business’s acquired holdings of structured data?

-

Data technological fundamentals

Controlling (big) data quality with tagging

Whatever your business or industry, any Big Data platform draws on multiple, fundamentally heterogeneous data sources, most frequently your transactional databases, your CRM database and other digital marketing data sources (analytics, campaigns, targeting, etc.). Back to Big Data basics: digital data collection. This particular component of the marketing ecosystem has got…

Data tutorials, tools and languages

Data tutorials, tools and languages

Spark Structured Streaming: performance testing

Spark is an open-source distributed computing framework that is more efficient than Hadoop and supports three main languages (Scala, Java and Python). It has rapidly carved out a significant niche in Big Data projects thanks to its ability to process high volumes of data in batch and streaming mode. Its 2.0 version introduced us to…

-

Data tutorials, tools and languages

Spark Structured Streaming: from data transformation to unit testing

Spark is an open-source distributed computing framework that is more efficient than Hadoop and supports three main languages (Scala, Java and Python). It has rapidly carved out a significant niche in Big Data projects thanks to its ability to process high volumes of data in batch and streaming mode. Its 2.0 version introduced us to a…

-

Data tutorials, tools and languages

Spark Structured Streaming: from data management to processing maintenance

Spark is an open-source distributed computing framework that is more efficient than Hadoop and supports three main languages (Scala, Java and Python). It has rapidly carved out a significant niche in Big Data projects thanks to its ability to process high volumes of data in batch and streaming mode. Its 2.0 version introduced us to…

-

Data Visualization

Color in Dashboarding: a Love-Hate relationship

When it’s time to add color to your dashboards, making the right choices so that everyone understands the result can easily get complicated. Colors have a strong impact on your dashboard: they carry meaning and need to be used wisely for an effective result. In this on-demand webinar, Jean-Philippe Favre, Data…

-

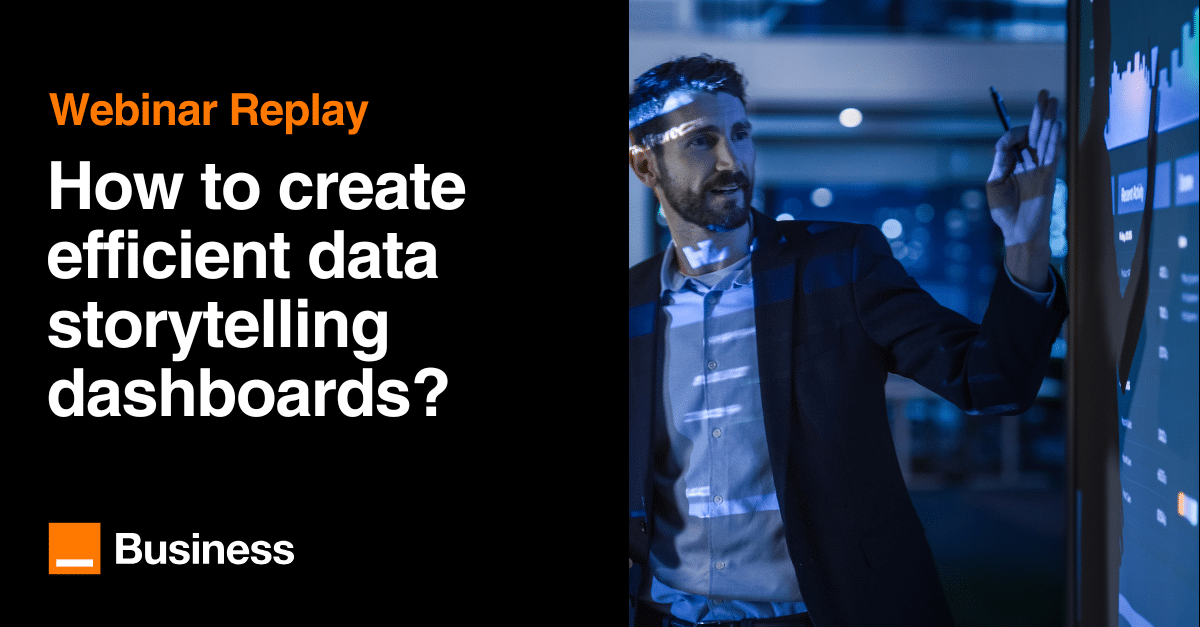

Data Visualization

How to create efficient data storytelling dashboards?

Designing dashboards today seems a very simple task, thanks to modern BI tools. However, designing effective dashboards that are useful and that people want to use is a different story. A good dashboard must follow specific rules, which our Data Artists experts explain in this webinar, available as a replay. How to tell Data-Stories in…

-

Data tutorials, tools and languages

How to use the Python integrator in Power BI?

The Python integration in Power BI is a huge step forward from Microsoft. It opens a wide range of possibilities for extracting and cleaning your data as well as creating attractive, fully customized visuals. Let’s see how it works and how to set up your Python environment in Power BI Desktop. As…

-

Data tutorials, tools and languages

[TUTORIAL] First steps with Zeppelin

Zeppelin is the ideal companion for any Spark installation. It is a notebook that lets you perform interactive analytics in a web browser. You can execute Spark code and view the results in table or graph form. To find out more, follow the guide!

-

Data tutorials, tools and languages

Tutorial: How to Install a Hadoop Cluster

You have read many articles on Hadoop and now you want to get familiar with it, but how do you install and apply this new technology? The recommended approach is to install a turnkey virtual machine supplied by a major vendor.

Technological solutions

Technological solutions

How to Optimize AWS Cloud with the Well-Architected Framework

As organizations spend more time in the cloud, they begin to understand how their cloud usage seemingly evolves endlessly over time. How can you continuously work on security, reliability, and efficiency in your cloud infrastructure while remaining cost-effective? A general answer to this question is the Well-Architected Framework, often abbreviated as WAF. Well-Architected Framework. When…

-

Data & AI culture

Understanding Splunk: A Powerful Tool for Data Analysis and Security

In this blog, we’ll provide a clear introduction to Splunk: What it is, how it works, its most common use cases, and why it’s so popular. By keeping things straightforward, our aim is to offer value to both technical and non-technical readers. What is Splunk? This question has been asked many times and it’s impressive…

-

Data Marketing

Adobe’s Suite: revolutionizing customer engagement and driving business growth

In today’s competitive business landscape, companies must find innovative ways to leverage data and understand their customers better. Adobe’s suite—comprising the Real-Time Customer Data Platform (CDP), Journey Optimizer, and Customer Journey Analytics—provides powerful tools to enhance customer engagement and drive growth. This article explores how these Adobe applications transform customer experiences, based on the Forrester…

-

Technological solutions

Sovereign Cloud: how the geopolitical situation leads to an increased need for data sovereignty

Data sovereignty and compliance have had a major impact on IT decisions, and organisations need an adaptable data infrastructure to meet new privacy laws. Understanding data sovereignty is essential for strategic IT planning. That’s why we’ve written an ebook explaining the concept of “Sovereign Cloud” and what it means within the EU. This is what…

-

Data & AI culture

How to choose an entity type in Semarchy?

You have just implemented Semarchy as your Master Data Management solution and you are faced with one of the first questions a developer has to ask: what entity type should I choose for each of my data objects? Should I choose a basic, an ID-matched or a fuzzy-matched entity? And what impact might this have on…

-

Technological solutions

Creating a sustainable cloud strategy for the future

The cloud helps you innovate, but there are many pitfalls. Thinking about how to use and evolve cloud solutions in a secure and responsible manner is complex and requires a plan.

-

Customer Experience

Can you be customer-centric without full visibility of the customer journey?

The challenges facing businesses today in understanding and optimizing customer journeys are significant. With so many channels and touchpoints, it’s easy for blind spots to emerge, leading to lost opportunities, frustrated customers, and lost revenue. This is why customer journey analytics is crucial for companies as it allows them to gain a holistic view of…

-

Data Strategy

Golden rules for businesses when moving to the Cloud

We already know why Cloud Data Platforms are a new Eldorado for enterprises. Cloud technology is accelerating companies’ digital transformation by offering virtually unlimited scalability, centralized and shared data, and easy infrastructure maintenance. But let’s look a little deeper and ask ourselves what are the…

-

Innovation

How do Low-Code Application Platforms bring value to your business?

In a recent study, Gartner estimates that 65% of all application development will be low code by 2024. Why are Low-Code Application Platforms (LCAP) revolutionizing digital transformation for companies? We discuss with our experts how this solution enables companies to deliver faster, with simplicity and agility. Our Orange Business Expert Podcast strives to answer…

-

Technological solutions

How to accelerate your decision-making process with Tableau

Do you have lots of standalone files used for reporting? Are you spending a huge amount of effort producing and sharing reports across your organization? How could you ease the reporting process for your decision-makers? How to optimize your reporting life cycle. With Tableau, we can optimize your reporting life cycle and bring visual analytics to…

-

Technologies

How to build the next generation Data Lake? Technical Session

You may have worked with the same Data Warehouse for some time, which now makes it harder to adapt to the needs and challenges that come with new technologies. So maybe it is time to consider a change to a new-generation Data Lake? If you consider switching to…

-

Technological solutions

Measuring to take action: reducing greenhouse gas emissions through effective reporting

Greenhouse gas (GHG) emission reduction objectives, based on scientific work, are now being formalized in international treaties and the “carbon budget” notion is starting to gain State-level traction. However, even though companies are now obligated to submit reports on their GHG emissions to authorities, these practices remain poorly resourced and investment in the reporting process…

-

Technological solutions

Big Data, unleashing the potential of company archives

Whatever their size or business sector, enterprises are required to preserve certain documents for a given period. But how is Big Data changing document archiving?

-

Technological solutions

What if the Initial Promise of the Web was, in fact, a Lie?

The initial promise of digital, back when it was still called the web, was simple, clear and unstoppable.

![Software is (still) eating the world [Part 2]](https://perspective.orange-business.com/wp-content/uploads/2021/02/marketing-still-eating-part2-cdp.jpg)