AI governance evolves alongside AI capabilities. As generative AI progresses from producing outputs to acting autonomously, organizations are shifting from early knowledge assistants built on retrieval-augmented generation (RAG) to agentic systems capable of executing business processes.

These extended capabilities bring opportunities, but also significant risks. With the rise of AI-related incidents, a robust governance framework becomes vital: not as a brake on innovation, but as a foundation that promotes responsible adoption, aligns AI with business strategy, and safeguards stakeholders in an environment of accelerating technological change.

AI governance defines how organizations ensure the responsible use of AI through policies, processes, accountability structures, and technical safeguards that span the AI lifecycle: development, deployment, and maintenance. A well-designed governance model aligns operations with ethical standards and regulatory expectations, ensuring reliability and trust.

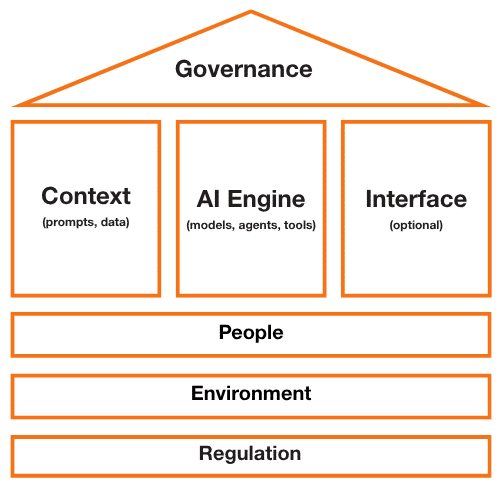

The complexity of governing agentic systems lies in their multi-layered nature: context, models, agents, tools, interfaces, infrastructure, and, crucially, the people who interact with or are impacted by them. Effective governance must consider all these components, as well as the broader regulatory and environmental landscape.

Agentic AI governance can be visualized as a layered architecture in which governance is the overarching structure that ensures alignment, safety, and accountability across all system components. Each layer, from the context that informs the system to the AI engine that acts and the interface that mediates human interaction, is shaped by and depends on the people, environment, and regulatory frameworks surrounding it.

Figure 1. This framework illustrates how governance oversees the full ecosystem, spanning context, AI engines, and interfaces, while being grounded in people, environment, and regulation. It emphasizes that responsible governance is both structural and relational, connecting technical components with human and societal dimensions.

Continuing our Agentic AI for Enterprises series, which began with Core Concepts for Choosing Autonomy with Intent and Scaling by Design, this third article explores how human-centered, adaptive governance enables secure and trustworthy agentic systems and ensures that innovation delivers sustainable business value.

Governance Principles

Governance is not a constraint; it is a balance between innovation and accountability, providing a roadmap for safely integrating evolving AI capabilities into enterprise operations. Core governance principles include:

- Accountability: Human oversight must remain central. Systems should operate under clear accountability, ensuring control and traceability.

- Transparency: Users must understand how the system works and how it is deployed, monitored, and managed, and they must always be informed when they are interacting with AI.

- Reliability & Safety: Systems must be robust against misuse and errors through continuous monitoring, feedback loops, and performance metrics calibrated to acceptable thresholds.

- Privacy & Security: Personal data must be protected, consent and privacy rights upheld, and systems secured against unauthorized access.

- Sustainability: AI systems should be designed and operated with awareness of their environmental footprint.

Trust, risk mitigation, and security must be embedded from the start by considering:

- Alignment with organizational strategy and stakeholder needs.

- Regulatory context (e.g., the EU AI Act, ISO standards) and the organization's role within it (provider, deployer, distributor).

- Risk classification under applicable frameworks (e.g., the EU AI Act's unacceptable, high, limited, and minimal risk tiers).

- A holistic design approach that accounts for technological, human, and environmental impacts.

- Evaluation frameworks that cover every modality and capability in use (text, image, audio, and agentic behavior).

- Proactive risk management through threat modeling, audits, and red teaming.
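To make risk classification actionable, a team might encode it as a release policy. The sketch below assumes a hypothetical internal policy table that maps each EU AI Act risk tier to the controls a system must have in place before deployment; the control names (`impact_assessment`, `human_oversight`, and so on) and the mapping itself are illustrative assumptions, not the Act's legal requirements.

```python
from enum import Enum


class RiskTier(Enum):
    """Risk tiers as named in the EU AI Act's risk-based approach."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical internal policy: controls required before a system in each
# tier may go live. Illustrative only, not taken from the regulation's text.
REQUIRED_CONTROLS: dict[RiskTier, frozenset[str]] = {
    RiskTier.UNACCEPTABLE: frozenset(),  # never deployable, see can_deploy
    RiskTier.HIGH: frozenset({
        "impact_assessment",
        "human_oversight",
        "event_logging",
        "technical_documentation",
        "red_team_report",
    }),
    RiskTier.LIMITED: frozenset({"ai_disclosure_to_users"}),
    RiskTier.MINIMAL: frozenset(),
}


def missing_controls(tier: RiskTier, implemented: set[str]) -> set[str]:
    """Return the required controls for this tier that are not yet in place."""
    return set(REQUIRED_CONTROLS[tier]) - implemented


def can_deploy(tier: RiskTier, implemented: set[str]) -> bool:
    """Block prohibited systems outright; otherwise require every control."""
    if tier is RiskTier.UNACCEPTABLE:
        return False
    return not missing_controls(tier, implemented)
```

For example, `can_deploy(RiskTier.LIMITED, {"ai_disclosure_to_users"})` returns `True`, while a high-risk system with only `event_logging` in place reports four missing controls. Keeping the policy as data rather than scattered conditionals lets compliance owners review and update it without touching the gating logic.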

Strong governance establishes the foundation for responsible AI adoption, but as systems evolve from static models to autonomous, context-aware agents, governance must evolve too. Traditional principles of accountability, transparency, and safety remain essential, yet agentic systems introduce new dynamics.

Governance for Agentic Systems

Agentic systems go beyond output generation. They plan, act, and interact with other systems. Governance, therefore, must be dynamic, adapting to evolving operational realities, tools, and contexts.

Key considerations include:

- Purpose Alignment: Each agent and tool must serve a well-defined function aligned with business objectives. Roles, permissions, and performance indicators should be explicit and continuously monitored.

- Govern by Design: Embed compliance, privacy, and responsible AI principles into the system architecture from inception, and scale governance with system maturity rather than over-engineering early prototypes.

- Impact Assessment: Evaluate societal, ethical, and business impacts early, integrating findings into design and deployment decisions.

- Data Minimization: Limit data access and processing to what each task requires, using role-based access controls to restrict what agents can reach and context scoping to keep the data they receive relevant, accurate, and current.

- Scope Enforcement: Define and technically enforce levels of autonomy, ensuring agents operate strictly within their assigned boundaries.

- Agent Catalogue: Maintain a central record of agent creators, usage, performance, and alignment with KPIs to enable lifecycle management and accountability.

- Continuous Monitoring: Establish ongoing evaluation against metrics for performance, security, and compliance, feeding insights back into refinement cycles.

- Testing: Conduct pilot trials with end-users to gather actionable feedback before full deployment.

- Documentation: Capture design decisions, dependencies, and operational context comprehensively to ensure reproducibility and traceability.
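Purpose alignment, scope enforcement, and the agent catalogue can share a single data structure. The following is a minimal sketch, assuming a hypothetical in-memory `AgentCatalogue` that records each agent's owner, purpose, permitted tools, data scopes, and maximum autonomy level, and rejects any call that falls outside that record. The three autonomy levels and scope strings such as `erp:read` are illustrative assumptions; a production system would back this with an identity provider and persistent storage.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Autonomy(IntEnum):
    """Hypothetical autonomy levels, ordered from least to most autonomous."""
    SUGGEST = 1      # agent proposes, a human executes
    APPROVE = 2      # agent executes after human approval
    AUTONOMOUS = 3   # agent executes on its own within scope


@dataclass(frozen=True)
class AgentRecord:
    """One catalogue entry: who owns the agent and what it may do."""
    agent_id: str
    owner: str
    purpose: str
    allowed_tools: frozenset[str]
    data_scopes: frozenset[str]            # e.g. "erp:read"
    max_autonomy: Autonomy
    kpis: dict[str, float] = field(default_factory=dict)


class ScopeViolation(Exception):
    """Raised when an agent acts outside its registered scope."""


class AgentCatalogue:
    def __init__(self) -> None:
        self._agents: dict[str, AgentRecord] = {}

    def register(self, record: AgentRecord) -> None:
        if record.agent_id in self._agents:
            raise ValueError(f"duplicate agent id: {record.agent_id}")
        self._agents[record.agent_id] = record

    def authorize(self, agent_id: str, tool: str, scopes: set[str],
                  autonomy: Autonomy) -> None:
        """Raise ScopeViolation unless the call fits the registered scope.

        Unregistered agents are denied by default.
        """
        record = self._agents.get(agent_id)
        if record is None:
            raise ScopeViolation(f"unregistered agent: {agent_id}")
        if tool not in record.allowed_tools:
            raise ScopeViolation(f"{agent_id} may not call {tool}")
        extra = scopes - record.data_scopes
        if extra:
            raise ScopeViolation(f"{agent_id} lacks data scopes {sorted(extra)}")
        if autonomy > record.max_autonomy:
            raise ScopeViolation(
                f"{agent_id} exceeds autonomy level {record.max_autonomy.name}")
```

Registering an `invoice-triage` agent with `allowed_tools={"read_invoice", "route_ticket"}`, `data_scopes={"erp:read"}`, and `max_autonomy=Autonomy.APPROVE` lets it read invoices, but a call to an unlisted tool, a request for `erp:write`, or an attempt to act fully autonomously raises `ScopeViolation`. Denying unregistered agents by default means an agent that never entered the catalogue cannot act at all, which keeps the catalogue and the enforcement point from drifting apart.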

Oversight Framework

Compliance & Risk

- Risk tiering, documentation (model and agent cards), audits, and impact assessments.

- Alignment with evolving regulatory and ethical standards.

Quality & Reliability

- Retrieval and grounding accuracy, coherence, task success, and recovery from failure.

- Monitoring of latency and throughput performance.
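Calibrating quality metrics to acceptable thresholds can start as a simple release gate over an offline evaluation run. The sketch below assumes each evaluation task reports whether it succeeded and how long it took; the nearest-rank 95th percentile and the threshold values passed in are illustrative choices, not prescribed targets.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalResult:
    """Outcome of one task in an offline evaluation suite."""
    task_id: str
    succeeded: bool
    latency_s: float


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile; pct must be in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def check_thresholds(results: list[EvalResult], min_success_rate: float,
                     max_p95_latency_s: float) -> dict[str, tuple[float, bool]]:
    """Return each metric's value and whether it meets its threshold."""
    if not results:
        raise ValueError("no evaluation results to score")
    success_rate = sum(r.succeeded for r in results) / len(results)
    p95_latency = percentile([r.latency_s for r in results], 95)
    return {
        "task_success_rate": (success_rate, success_rate >= min_success_rate),
        "p95_latency_s": (p95_latency, p95_latency <= max_p95_latency_s),
    }
```

With 20 tasks, one failure, and latencies of 1 to 20 seconds, `check_thresholds(results, min_success_rate=0.9, max_p95_latency_s=15.0)` reports a 0.95 success rate (pass) and a p95 latency of 19.0 seconds (fail). A deployment pipeline could then block promotion unless every metric passes.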

Safety, Security & Privacy

- Prevention of harmful content or bias.

- Tool and code security, secret management, and privacy controls.

- Authentication, authorization, and access management.

Agentic Controls

- Oversight of task adherence, intent resolution, and tool invocation.

- Guardrails including sandboxing, rate limits, and spending caps.

- Human override and escalation mechanisms.
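Rate limits, spending caps, and human escalation are easiest to enforce at a single choke point that every tool call passes through. A minimal sketch, assuming a hypothetical `ToolGuard` wrapper, a caller-supplied cost estimate per call, and an `approve` callback that stands in for whatever review queue routes high-risk actions to a person:

```python
import time
from collections import deque
from typing import Any, Callable


class GuardrailError(Exception):
    """Raised when a tool call is blocked by a guardrail."""


class ToolGuard:
    """Wraps tool calls with a sliding-window rate limit, a spending cap,
    and human approval for tools flagged as high risk."""

    def __init__(self, max_calls: int, window_s: float, budget: float,
                 high_risk_tools: set[str],
                 approve: Callable[[str, dict[str, Any]], bool],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_calls = max_calls
        self.window_s = window_s
        self.remaining_budget = budget
        self.high_risk_tools = high_risk_tools
        self.approve = approve        # hypothetical human-review hook
        self.clock = clock            # injectable for testing
        self._calls: deque[float] = deque()

    def invoke(self, tool_name: str, fn: Callable[..., Any], cost: float,
               **kwargs: Any) -> Any:
        now = self.clock()
        # Sliding window: forget calls older than window_s seconds.
        while self._calls and now - self._calls[0] >= self.window_s:
            self._calls.popleft()
        if len(self._calls) >= self.max_calls:
            raise GuardrailError(
                f"rate limit: {self.max_calls} calls per {self.window_s}s")
        if cost > self.remaining_budget:
            raise GuardrailError(
                f"spending cap: {tool_name} costs {cost}, "
                f"{self.remaining_budget} left")
        # Escalate high-risk tools to a human before anything is charged.
        if tool_name in self.high_risk_tools and not self.approve(tool_name, kwargs):
            raise GuardrailError(f"escalated call to {tool_name} was rejected")
        # Record and charge before calling, so a failing tool still counts.
        self._calls.append(now)
        self.remaining_budget -= cost
        return fn(**kwargs)
```

Blocked calls consume neither a rate-limit slot nor budget, and injecting the clock makes the window behavior testable. Sandboxing, by contrast, belongs at the execution layer (for example isolated runtimes and restricted credentials) and is outside this sketch.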

Observability & Operations

- Real-time monitoring, tracing, and auditing.

- Red teaming, evaluation suites, and incident response protocols.

- Lifecycle management and rollback mechanisms.
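Auditing is more useful when the trail itself is tamper-evident. The sketch below is an illustrative design, not a specific product's API: it chains each audit entry to the previous one with a SHA-256 hash, so editing or deleting an earlier record makes `verify()` fail. It is tamper-evident rather than tamper-proof, since anyone able to rewrite the whole log could recompute every hash; the latest hash would need to be stored somewhere the agent cannot write.

```python
import hashlib
import json
import time
from typing import Any


class AuditLog:
    """Append-only, hash-chained audit trail for agent actions."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: list[dict[str, Any]] = []

    def record(self, agent_id: str, action: str,
               detail: dict[str, Any]) -> dict[str, Any]:
        """Append an entry linked to the hash of the previous one."""
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {"ts": time.time(), "agent_id": agent_id, "action": action,
                 "detail": detail, "prev_hash": prev_hash}
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: dict[str, Any]) -> str:
        # Hash a canonical JSON form of every field except the hash itself.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def verify(self) -> bool:
        """Recompute the chain; False if any entry was altered or removed."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True

    def export_jsonl(self) -> str:
        """Serialize as JSON Lines for ingestion by a log pipeline."""
        return "\n".join(json.dumps(e, sort_keys=True) for e in self.entries)
```

`export_jsonl()` emits one JSON object per line, a format most log and tracing pipelines can ingest for incident review, and `detail` must therefore contain only JSON-serializable values.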

User Experience & Sustainability

- Human-in-the-loop design, explainability, and feedback integration.

- Secure, accessible, and transparent interfaces.

- Sustainable infrastructure that minimizes energy use and compute costs.

Governance for agentic systems is a continuous process, not a fixed framework. It goes beyond control, enabling safe autonomy and responsible innovation. By blending structured oversight with adaptive mechanisms, enterprises can empower agents to act with confidence while preserving accountability and alignment with human and organizational values. In doing so, governance becomes not a constraint but an enabler of trusted and scalable AI innovation.

Closing Insights

Effective governance is the foundation for trusted and scalable agentic AI. It aligns innovation with accountability, ensuring that AI systems advance business goals safely, transparently, and sustainably.

- People first. People do not use technology they do not trust. AI must be designed, developed, and deployed around human needs and values.

- Governance as an enabler. It is not an afterthought or constraint; it unlocks the potential of Agentic AI while ensuring safety, transparency, accountability, and compliance.

- Human oversight remains essential. Regardless of AI progress, human-in-the-loop mechanisms are vital to maintain trust, manage risk, and uphold ethical integrity.

- Inclusive creation. Today’s creators include non-technical users building with no-code tools such as Microsoft 365 Copilot; governance must therefore be intuitive, embedded, and accessible.

- Adaptive and continuous. Governance should evolve with AI capabilities, combining structured oversight with real-time monitoring and improvement.

- Culture drives adoption. Building awareness, training, and shared responsibility fosters trust and empowers people to use agentic AI effectively and responsibly.

In essence, agentic governance turns autonomy into trusted capability, enabling innovation that is safe, accountable, and human-aligned.

Looking Ahead

Technology and policy alone cannot ensure adoption; culture does. The success of agentic AI depends on people: building trust through communities, training, and hands-on experimentation. Organizations must equip their workforce to engage responsibly and confidently with AI. This means fostering awareness, promoting transparency, and encouraging participation in AI initiatives. Ultimately, the path to trusted agentic AI lies in empowering people to use it effectively, securely, and ethically.

Future installments will cover:

- Adoption. Equipping employees and organizations to use agentic AI effectively.

