74% of business leaders believe the benefits of generative AI will outweigh the concerns, according to the Capgemini Research Institute report Harnessing the value of generative AI: Top use cases across industries. Yet only 19% of companies have launched concrete initiatives. So where to begin? This article outlines the key steps.

Turning AI Promise into Business Value

See moreWhat are the prerequisites for generative AI? What key questions should be addressed beforehand? Even for professional data scientists, embarking on an AI journey can be challenging, as the technology often forces companies to rethink how they work. Generative AI is no exception. Three pillars are essential to successfully launch the development of a use case.

Step #1: don’t start from scratch!

Today, there are plenty of pre-trained language models (LLMs) available that can help address a range of objectives right from the start of a project, especially through conversational assistants. The same applies to image recognition. As a result, it is not only possible but strongly recommended to leverage existing resources provided by the community, whether through open source algorithms or commercial offerings like Microsoft Azure. Teams already have access to algorithms capable of recognizing an image or an object, or identifying verbs, adjectives, complements or direct objects in a sentence. This represents a significant time-saver.

If the available resources fall short, it is in the team’s best interest to conduct a market analysis early on to identify and test existing solutions that could be used without reinventing the wheel.

A generative AI project is not all that different from a traditional AI or data science project. Agility and the ability to expand the scope gradually are key success factors.

A generative AI project is not so different from a traditional artificial intelligence or data science project. Agility and the ability to gradually expand its scope are essential success factors.

Step #2: expand the scope gradually

A generative AI project is not so different from a traditional artificial intelligence or data science project. As a result, if you start off with too many features or excessive complexity, the goal will feel too far out of reach and be difficult to achieve. Not only will the development time be disproportionately long, but you also risk encountering a multitude of issues at once, leading to project failure.

The solution is to build a conversational assistant gradually. Start with an assistant that can initially handle only 20% of the problems or use cases, then improve it step by step. Agility and the ability to scale the scope progressively are key success factors in any generative AI initiative.

At the start of a project, it’s essential to work with business teams to clearly define the various features you want to include in the conversational assistant. These features should then be grouped into blocks of functionality or use cases, using two key axes:

A business axis, defined by the future users

A technical axis, where experts assess the algorithmic complexity

The goal is to begin with “quick wins” features that offer high business value and low technical complexity. Then, based on user feedback, the assistant can be enriched in each sprint with new features, additional use cases, or new data sources. This is an iterative process in which each development cycle is evaluated to prioritize the most impactful improvements.

Step #3: put your algorithm to the test

You not only need to test your algorithm with business users, but you also need to do it as early as possible. Do not wait for the product to be perfect before presenting it. However, once it is in the hands of users, you must also be able to iterate quickly and easily. This requires enough flexibility to automate delivery and model training in order to continuously enhance features.

Scalability is another key component to consider. Depending on the data used—whether internal only or also including open data and other external sources—the training volumes may become significant or even massive. That is why having a scalable or at least easily upgradable infrastructure is also a critical success factor.

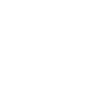

How can you facilitate the adoption of generative AI?

To answer this question, we drew on real use cases from some of our clients. Here is how generative AI helped them overcome challenges and accelerate their projects.

Case 1: you want to develop your own algorithm

Using generative AI allows you to speed up the labeling phase (images, text, etc.). This is a major advantage, as data labeling represents a significant share of computer vision and NLP projects. Current tools make it possible to collect large volumes of labeled data. The result: you can train your models on premise with these datasets while keeping full control over your algorithm from end to end.

Second use case: you don’t have enough data to train your LLM

Generative AI can help you build a substantial training base. For instance, you could ask GPT (or another model) to generate a thousand different ways of phrasing a single question, across various styles and registers. This acceleration effect allows you to train your own model much faster and more effectively.

Third use case: is a hybrid private / open tool possible?

This question often arises when companies want to add features specific to their own model. In that case, it is necessary to develop an in-house conversational assistant. For more generic questions, however, it is entirely possible to rely on a solution such as Azure OpenAI. Generative AI therefore addresses both needs, but through two different tools: one developed internally to handle “private” intentions specific to the company, and the other, such as Azure OpenAI, to cover “open” generic queries.

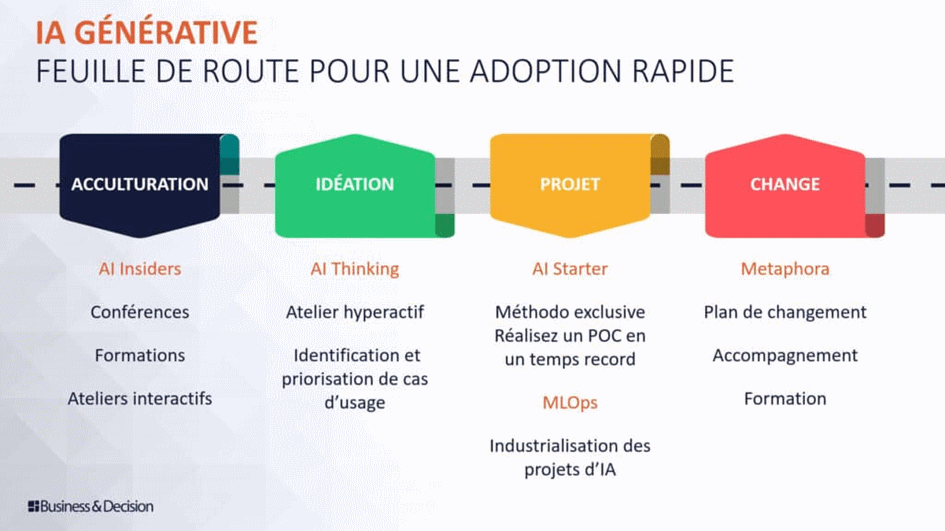

How to implement a gradual approach to generative AI

The principle of generative AI is to enrich the model step by step with increasingly relevant features and use cases. This approach is less straightforward than it may seem. Why? Because using tools like ChatGPT directly within the enterprise is not recommended due to data confidentiality concerns.

1. The zero-shot mode

The first step is to deploy a ChatGPT-like solution at enterprise scale, but with a tool that ensures confidentiality. This usually means relying on a preconfigured Large Language Model (LLM) designed for enterprise use (for example, Azure OpenAI). The idea is to take this LLM and make it available to employees. This is what we call the zero-shot stage.

2. Few-shot mode

At this stage, the conversational bot relies on context and keeps a history of prompts. We are now entering more specific professional use cases. Few-shot mode consists in refining the assistant’s responses. Each time the model is queried, as much contextual information as possible is provided, a practice known as prompt engineering. The result is answers that are more closely aligned with the company’s vocabulary and business challenges.

3. Agents

The next step is to integrate these models into the company’s workflows so that they can be used seamlessly, without disrupting existing processes. In addition to context, we introduce memory (indexing) and actions (external tools). The assistant can then browse indexed data as input and connect with external tools, for instance to send answers to an API.

4. Training and retraining

Finally, the models can be trained, retrained, or even overtrained with the company’s own language and ontology. This typically involves customizing the final layers of the model. The assistant thus becomes increasingly effective, tailored to users, and fully connected to internal tools.

This is how generative AI can progressively create tangible value within the enterprise.

Generative AI should therefore be viewed first and foremost as an artificial intelligence project, with all the complexity that project development phases can involve. As with many AI initiatives, it can quickly become intricate. To get started, it is advisable to work with experts in the field, seek the right guidance, and take the time to carefully weigh the pros and cons of each solution.

Comments (0)

Your email address is only used by Business & Decision, the controller, to process your request and to send any Business & Decision communication related to your request only. Learn more about managing your data and your rights.